The dichotomy of Vision and Language

Lex Fridman and Ilya Sutskever had an interesting conversation on the AI Podcast at https://www.youtube.com/watch?v=xoVibFYi1Gs

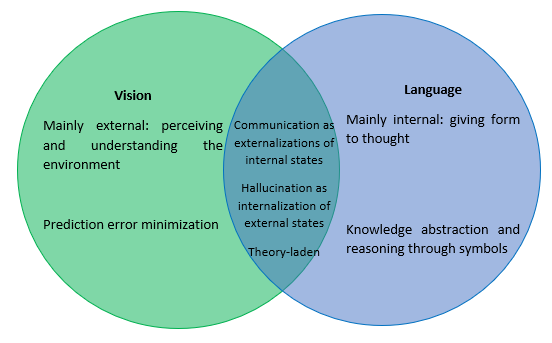

An interesting question raised was where vision starts and language ends, or vice versa. Here is a Venn diagram that illustrates my opinion on this dichotomy:

I think our brain is not a monolith, in the sense that no single algorithm underlies our perception, ability to speak a language, capacity for motor control, memory, and other faculties. Neuroscience associates different regions of the brain with different capacities. This is why patients with localized cerebral lesions, such as patient H.M., may lose the capacity for memory but not the capacity to speak.

Given this premise, I think vision and language constitute an example of capacities that are entangled in our mind (consider reading a book, where both faculties are active as you perceive the sentences on a page). However, despite their being entangled, I think we can try to untangle them, partly because there are also significant differences between them.

I mainly associate our perceptual system with processing the external real world. I could also associate it with internal imagery that reflects the real world; for example, we can see memories of a past trip. I associate language, however, not with imagery but with the manipulation of symbols. Specifically, I think language is intricately tied to the manipulation of numbers and to being able to think about math. I think the linguistic capability to think about math can be distinguished from the perceptual system mainly because math doesn’t exist in the real world, but rather in an abstract one.
